k8s-7: Persisting Kafka + ZooKeeper in single-node and cluster deployments

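I needed this in a Kubernetes environment a while ago. Every document I read had pitfalls, so after running into them myself I've written up what worked. Feel free to take whatever you need.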

I. Single-node deployment

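Recommended for development environments only, not for production. No PersistentVolume is declared here: the manifests below rely on whatever storage the container runtime provides, so the data does not survive the pod being recreated.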

1. Install ZooKeeper

```bash
cat > zk.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  labels:
    app: zookeeper-service
  name: zookeeper-service
spec:
  ports:
    - name: zookeeper-port
      port: 2181
      targetPort: 2181
  selector:
    app: zookeeper
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: zookeeper
  name: zookeeper
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
    spec:
      containers:
        - image: wurstmeister/zookeeper
          imagePullPolicy: IfNotPresent
          name: zookeeper
          ports:
            - containerPort: 2181
EOF
```
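The manifest is written with an unquoted heredoc delimiter (`<<EOF`), so the shell expands any `$...` in the body before the file is written. That is harmless for this manifest, but it would silently break a manifest like the cluster Kafka StatefulSet later on, whose command contains `${HOSTNAME##*-}`. A minimal demonstration, assuming bash and a writable temp directory (the host name value is made up for illustration):

```bash
#!/usr/bin/env bash
# Shows how quoting the heredoc delimiter changes ${...} expansion.
tmpdir=$(mktemp -d)
HOSTNAME=workstation-7   # simulated host name, for illustration only

# Unquoted delimiter: ${HOSTNAME##*-} is expanded now, on this machine.
cat > "$tmpdir/unquoted.txt" <<EOF
broker.id=${HOSTNAME##*-}
EOF

# Quoted delimiter: the body is written verbatim, so the pod's shell expands it later.
cat > "$tmpdir/quoted.txt" <<'EOF'
broker.id=${HOSTNAME##*-}
EOF

cat "$tmpdir/unquoted.txt"   # broker.id=7
cat "$tmpdir/quoted.txt"     # broker.id=${HOSTNAME##*-}
```

When a manifest must keep `$` literally, write it with `<<'EOF'` (or just save the YAML with an editor).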

2. Install Kafka

```bash
cat > kafka.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: kafka-service
  labels:
    app: kafka
spec:
  type: NodePort
  ports:
    - port: 9092
      name: kafka-port
      targetPort: 9092
      nodePort: 30092
      protocol: TCP
  selector:
    app: kafka
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kafka
  labels:
    app: kafka
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kafka
  template:
    metadata:
      labels:
        app: kafka
    spec:
      containers:
        - name: kafka
          image: wurstmeister/kafka
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 9092
          env:
            - name: KAFKA_ADVERTISED_PORT
              value: "9092"
            - name: KAFKA_ADVERTISED_HOST_NAME
              value: "kafka-service" # the Kafka Service (its name or ClusterIP)
            - name: KAFKA_ZOOKEEPER_CONNECT
              value: zookeeper-service:2181
            - name: KAFKA_BROKER_ID
              value: "1"
EOF
```
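The wurstmeister/kafka image turns `KAFKA_`-prefixed environment variables into `server.properties` entries. The sketch below assumes the simple convention of stripping the prefix, lowercasing, and replacing `_` with `.`; the image's own start script also has exclusions and special cases, so check it for your image version. Note that `advertised.host.name` and `advertised.port` are legacy settings, deprecated in favor of `advertised.listeners`. The helper name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: mimics the KAFKA_* -> server.properties naming convention
# (strip the KAFKA_ prefix, lowercase, replace "_" with ".").
env_to_property() {
  local name="${1#KAFKA_}"
  printf '%s\n' "$name" | tr '[:upper:]' '[:lower:]' | tr '_' '.'
}

for var in KAFKA_BROKER_ID KAFKA_ZOOKEEPER_CONNECT KAFKA_ADVERTISED_HOST_NAME; do
  echo "$var -> $(env_to_property "$var")"
done
# KAFKA_BROKER_ID -> broker.id
# KAFKA_ZOOKEEPER_CONNECT -> zookeeper.connect
# KAFKA_ADVERTISED_HOST_NAME -> advertised.host.name
```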

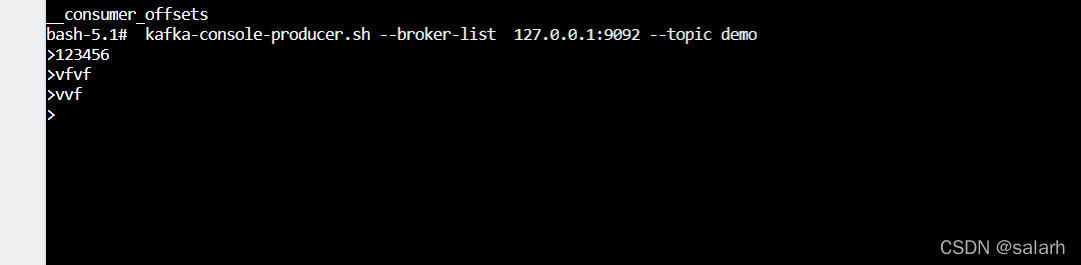

3. Verify

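Exec into the Kafka container (for example `kubectl exec -it deploy/kafka -- sh`) and test with a console producer and a console consumer.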

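Producer:

```bash
kafka-console-producer.sh --broker-list 127.0.0.1:9092 --topic demo
```

(Newer Kafka releases also accept `--bootstrap-server` for the console producer and deprecate `--broker-list`.)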

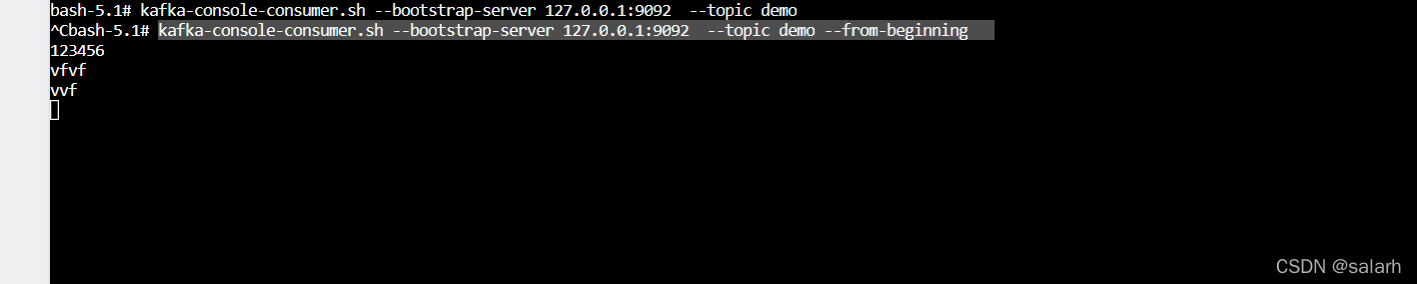

Consumer (run it from a second shell in the same container):

```bash
kafka-console-consumer.sh --bootstrap-server 127.0.0.1:9092 --topic demo --from-beginning
```

II. Cluster deployment

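This is the setup used in production. Storage is mounted through PV/PVC: the NFS-backed PersistentVolumes are created in advance, and the StatefulSets claim them through PVCs.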

1. ZooKeeper pv-pvc.yaml

```yaml
kind: PersistentVolume
apiVersion: v1
metadata:
  name: k8s-pv-zk0
  annotations:
    volume.beta.kubernetes.io/storage-class: "wms-zook"
  labels:
    type: local
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.XX.XX
    path: "/mnt/nas/zmj_pord/nfs/wms-zookeeper/pv0"
---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: k8s-pv-zk1
  annotations:
    volume.beta.kubernetes.io/storage-class: "wms-zook"
  labels:
    type: local
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.XX.XX
    path: "/mnt/nas/zmj_pord/nfs/wms-zookeeper/pv1"
---
kind: PersistentVolume
apiVersion: v1
metadata:
  name: k8s-pv-zk2
  annotations:
    volume.beta.kubernetes.io/storage-class: "wms-zook"
  labels:
    type: local
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.XX.XX
    path: "/mnt/nas/zmj_pord/nfs/wms-zookeeper/pv2"
```
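The three PersistentVolumes differ only in their index. If you would rather not maintain them by hand, a small loop can generate the same manifests. This is a sketch that assumes the NFS layout above; `192.168.XX.XX` is still a placeholder you have to fill in, and the output file name `zk-pv.yaml` is my own choice:

```bash
#!/usr/bin/env bash
# Generates the k8s-pv-zk0..2 PersistentVolumes shown above into one multi-document file.
NFS_SERVER="192.168.XX.XX"                        # placeholder, as in the manifest above
BASE_PATH="/mnt/nas/zmj_pord/nfs/wms-zookeeper"
OUT="${OUT:-zk-pv.yaml}"                          # hypothetical output file name

: > "$OUT"   # truncate the output file
for i in 0 1 2; do
  # YAML document separator between PVs (not before the first one).
  [ "$i" -gt 0 ] && echo '---' >> "$OUT"
  cat >> "$OUT" <<EOF
kind: PersistentVolume
apiVersion: v1
metadata:
  name: k8s-pv-zk${i}
  annotations:
    volume.beta.kubernetes.io/storage-class: "wms-zook"
  labels:
    type: local
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: ${NFS_SERVER}
    path: "${BASE_PATH}/pv${i}"
EOF
done
echo "wrote $OUT"
```

Then apply it with `kubectl apply -f zk-pv.yaml`. Here the unquoted `<<EOF` is intentional, because `${i}` and the two variables are meant to be expanded.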

2. ZooKeeper all.yaml

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: zk-hs
  labels:
    app: zk
spec:
  ports:
    - port: 2888
      name: server
    - port: 3888
      name: leader-election
  clusterIP: None
  selector:
    app: zk
---
apiVersion: v1
kind: Service
metadata:
  name: zk-cs
  labels:
    app: zk
spec:
  type: NodePort
  ports:
    - port: 2181
      name: client
  selector:
    app: zk
---
# policy/v1 requires Kubernetes 1.21+; policy/v1beta1 was removed in 1.25.
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: zk-pdb
spec:
  selector:
    matchLabels:
      app: zk
  maxUnavailable: 1
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zk
spec:
  selector:
    matchLabels:
      app: zk
  serviceName: zk-hs
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: Parallel
  template:
    metadata:
      labels:
        app: zk
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                      - zk
              topologyKey: "kubernetes.io/hostname"
      containers:
        - name: kubernetes-zookeeper
          imagePullPolicy: Always
          image: "mirrorgooglecontainers/kubernetes-zookeeper:1.0-3.4.10"
          resources:
            requests:
              memory: "1Gi"
              cpu: "0.5"
          ports:
            - containerPort: 2181
              name: client
            - containerPort: 2888
              name: server
            - containerPort: 3888
              name: leader-election
          command:
            - sh
            - -c
            - "start-zookeeper \
              --servers=3 \
              --data_dir=/var/lib/zookeeper/data \
              --data_log_dir=/var/lib/zookeeper/data/log \
              --conf_dir=/opt/zookeeper/conf \
              --client_port=2181 \
              --election_port=3888 \
              --server_port=2888 \
              --tick_time=2000 \
              --init_limit=10 \
              --sync_limit=5 \
              --heap=512M \
              --max_client_cnxns=60 \
              --snap_retain_count=3 \
              --purge_interval=12 \
              --max_session_timeout=40000 \
              --min_session_timeout=4000 \
              --log_level=INFO"
          readinessProbe:
            exec:
              command:
                - sh
                - -c
                - "zookeeper-ready 2181"
            initialDelaySeconds: 10
            timeoutSeconds: 5
          livenessProbe:
            exec:
              command:
                - sh
                - -c
                - "zookeeper-ready 2181"
            initialDelaySeconds: 10
            timeoutSeconds: 5
          volumeMounts:
            - name: datadir
              mountPath: /var/lib/zookeeper
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
  volumeClaimTemplates:
    - metadata:
        name: datadir
        annotations:
          volume.beta.kubernetes.io/storage-class: "wms-zook"
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 5Gi
```
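Why three replicas and `maxUnavailable: 1`: a ZooKeeper ensemble of n servers keeps serving only while a majority, floor(n/2) + 1, is up, so it tolerates n minus that many failures. A small arithmetic check (the helper names are hypothetical):

```bash
#!/usr/bin/env bash
# Majority quorum for an ensemble of n ZooKeeper servers: floor(n/2) + 1.
quorum_size() { echo $(( $1 / 2 + 1 )); }
# Number of servers that can be down while a quorum still exists.
tolerated_failures() { echo $(( $1 - $(quorum_size "$1") )); }

for n in 1 3 4 5; do
  echo "servers=$n quorum=$(quorum_size "$n") tolerates=$(tolerated_failures "$n")"
done
# servers=1 quorum=1 tolerates=0
# servers=3 quorum=2 tolerates=1
# servers=4 quorum=3 tolerates=1
# servers=5 quorum=3 tolerates=2
```

With 3 servers, `maxUnavailable: 1` lets a voluntary disruption such as a node drain evict at most one ZooKeeper pod at a time, which is exactly what the ensemble can survive; a fourth server would add no extra fault tolerance. The required pod anti-affinity also means you need at least three schedulable nodes.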

3. Kafka pv-pvc

```yaml
# PersistentVolumes are cluster-scoped, so they take no namespace.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: k8s-pv-kafka0
  labels:
    app: kafka
  annotations:
    volume.beta.kubernetes.io/storage-class: "wms-kafka"
spec:
  capacity:
    storage: 5G
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.XX.XX
    path: "/mnt/nas/zmj_pord/nfs/wms-kafka/pv0"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: k8s-pv-kafka1
  labels:
    app: kafka
  annotations:
    volume.beta.kubernetes.io/storage-class: "wms-kafka"
spec:
  capacity:
    storage: 5G
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.XX.XX
    path: "/mnt/nas/zmj_pord/nfs/wms-kafka/pv1"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: k8s-pv-kafka2
  labels:
    app: kafka
  annotations:
    volume.beta.kubernetes.io/storage-class: "wms-kafka"
spec:
  capacity:
    storage: 5G
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.XX.XX
    path: "/mnt/nas/zmj_pord/nfs/wms-kafka/pv2"
```
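The StatefulSets' `volumeClaimTemplates` create one PVC per pod, named `<template name>-<StatefulSet name>-<ordinal>`. Each claim then binds to one of the pre-created PVs with the same storage class and access mode and enough capacity; which PV a given pod ends up with is not guaranteed to follow the index. A hypothetical helper that prints the expected claim names, handy for comparing against `kubectl get pvc`:

```bash
#!/usr/bin/env bash
# Prints the PVC names a StatefulSet creates from a volumeClaimTemplate:
# <template>-<statefulset>-<ordinal>
pvc_names() {
  local template="$1" sts="$2" replicas="$3" i
  for (( i = 0; i < replicas; i++ )); do
    echo "${template}-${sts}-${i}"
  done
}

pvc_names datadir zk 3      # datadir-zk-0, datadir-zk-1, datadir-zk-2 (one per line)
pvc_names datadir kafka 3   # datadir-kafka-0, datadir-kafka-1, datadir-kafka-2
```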

4. Kafka all.yaml

(The exec-based health check has to be commented out here; the manifest keeps only a TCP readiness probe.)

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: kafka-hs
  labels:
    app: kafka
spec:
  ports:
    - port: 9092
      name: server
  clusterIP: None
  selector:
    app: kafka
---
apiVersion: v1
kind: Service
metadata:
  name: kafka-cs
  labels:
    app: kafka
spec:
  selector:
    app: kafka
  type: NodePort
  ports:
    - name: client
      port: 9092
      # nodePort: 19092
---
# policy/v1 requires Kubernetes 1.21+; policy/v1beta1 was removed in 1.25.
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: kafka-pdb
spec:
  selector:
    matchLabels:
      app: kafka
  minAvailable: 2
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: kafka
spec:
  serviceName: kafka-hs
  replicas: 3
  selector:
    matchLabels:
      app: kafka
  template:
    metadata:
      labels:
        app: kafka
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                      - kafka
              topologyKey: "kubernetes.io/hostname"
        podAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 1
              podAffinityTerm:
                labelSelector:
                  matchExpressions:
                    - key: "app"
                      operator: In
                      values:
                        - zk
                topologyKey: "kubernetes.io/hostname"
      terminationGracePeriodSeconds: 300
      containers:
        - name: kafka
          # imagePullPolicy: IfNotPresent
          image: registry.cn-hangzhou.aliyuncs.com/jaxzhai/k8skafka:v1
          #image: wurstmeister/kafka
          # Debug only: keeps the container idle instead of starting Kafka.
          # A container can have only one `command`, so keep this commented out.
          # command: [ "/bin/bash", "-ce", "tail -f /dev/null" ]
          resources:
            requests:
              memory: "200M"
              cpu: 500m
          ports:
            - containerPort: 9092
              name: server
          command:
            - sh
            - -c
            - "exec kafka-server-start.sh /opt/kafka/config/server.properties --override broker.id=${HOSTNAME##*-} \
              --override listeners=PLAINTEXT://:9092 \
              --override zookeeper.connect=zk-0.zk-hs.prod-zmj-wms.svc.cluster.local:2181,zk-1.zk-hs.prod-zmj-wms.svc.cluster.local:2181,zk-2.zk-hs.prod-zmj-wms.svc.cluster.local:2181 \
              --override log.dir=/var/lib/kafka \
              --override auto.create.topics.enable=true \
              --override auto.leader.rebalance.enable=true \
              --override background.threads=10 \
              --override compression.type=producer \
              --override delete.topic.enable=true \
              --override leader.imbalance.check.interval.seconds=300 \
              --override leader.imbalance.per.broker.percentage=10 \
              --override log.flush.interval.messages=9223372036854775807 \
              --override log.flush.offset.checkpoint.interval.ms=60000 \
              --override log.flush.scheduler.interval.ms=9223372036854775807 \
              --override log.retention.bytes=-1 \
              --override log.retention.hours=168 \
              --override log.roll.hours=168 \
              --override log.roll.jitter.hours=0 \
              --override log.segment.bytes=1073741824 \
              --override log.segment.delete.delay.ms=60000 \
              --override message.max.bytes=1000012 \
              --override min.insync.replicas=1 \
              --override num.io.threads=8 \
              --override num.network.threads=3 \
              --override num.recovery.threads.per.data.dir=1 \
              --override num.replica.fetchers=1 \
              --override offset.metadata.max.bytes=4096 \
              --override offsets.commit.required.acks=-1 \
              --override offsets.commit.timeout.ms=5000 \
              --override offsets.load.buffer.size=5242880 \
              --override offsets.retention.check.interval.ms=600000 \
              --override offsets.retention.minutes=1440 \
              --override offsets.topic.compression.codec=0 \
              --override offsets.topic.num.partitions=50 \
              --override offsets.topic.replication.factor=3 \
              --override offsets.topic.segment.bytes=104857600 \
              --override queued.max.requests=500 \
              --override quota.consumer.default=9223372036854775807 \
              --override quota.producer.default=9223372036854775807 \
              --override replica.fetch.min.bytes=1 \
              --override replica.fetch.wait.max.ms=500 \
              --override replica.high.watermark.checkpoint.interval.ms=5000 \
              --override replica.lag.time.max.ms=10000 \
              --override replica.socket.receive.buffer.bytes=65536 \
              --override replica.socket.timeout.ms=30000 \
              --override request.timeout.ms=30000 \
              --override socket.receive.buffer.bytes=102400 \
              --override socket.request.max.bytes=104857600 \
              --override socket.send.buffer.bytes=102400 \
              --override unclean.leader.election.enable=true \
              --override zookeeper.session.timeout.ms=6000 \
              --override zookeeper.set.acl=false \
              --override broker.id.generation.enable=true \
              --override connections.max.idle.ms=600000 \
              --override controlled.shutdown.enable=true \
              --override controlled.shutdown.max.retries=3 \
              --override controlled.shutdown.retry.backoff.ms=5000 \
              --override controller.socket.timeout.ms=30000 \
              --override default.replication.factor=1 \
              --override fetch.purgatory.purge.interval.requests=1000 \
              --override group.max.session.timeout.ms=300000 \
              --override group.min.session.timeout.ms=6000 \
              --override inter.broker.protocol.version=0.10.2-IV0 \
              --override log.cleaner.backoff.ms=15000 \
              --override log.cleaner.dedupe.buffer.size=134217728 \
              --override log.cleaner.delete.retention.ms=86400000 \
              --override log.cleaner.enable=true \
              --override log.cleaner.io.buffer.load.factor=0.9 \
              --override log.cleaner.io.buffer.size=524288 \
              --override log.cleaner.io.max.bytes.per.second=1.7976931348623157E308 \
              --override log.cleaner.min.cleanable.ratio=0.5 \
              --override log.cleaner.min.compaction.lag.ms=0 \
              --override log.cleaner.threads=1 \
              --override log.cleanup.policy=delete \
              --override log.index.interval.bytes=4096 \
              --override log.index.size.max.bytes=10485760 \
              --override log.message.timestamp.difference.max.ms=9223372036854775807 \
              --override log.message.timestamp.type=CreateTime \
              --override log.preallocate=false \
              --override log.retention.check.interval.ms=300000 \
              --override max.connections.per.ip=2147483647 \
              --override num.partitions=1 \
              --override producer.purgatory.purge.interval.requests=1000 \
              --override replica.fetch.backoff.ms=1000 \
              --override replica.fetch.max.bytes=1048576 \
              --override replica.fetch.response.max.bytes=10485760 \
              --override reserved.broker.max.id=1000 "
          env:
            - name: KAFKA_HEAP_OPTS
              value: "-Xmx300M -Xms200M"
            - name: KAFKA_OPTS
              value: "-Dlogging.level=INFO"
          volumeMounts:
            - name: datadir
              mountPath: /var/lib/kafka
          readinessProbe:
            tcpSocket:
              port: 9092
            timeoutSeconds: 5
            initialDelaySeconds: 20
            # exec:
            #   command:
            #     - sh
            #     - -c
            #     - "/opt/kafka/bin/kafka-broker-api-versions.sh --bootstrap-server=0.0.0.0:9092"
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
  volumeClaimTemplates:
    - metadata:
        name: datadir
        annotations:
          volume.beta.kubernetes.io/storage-class: "wms-kafka"
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 5G
```
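Two shell details in the Kafka command are worth checking in isolation. `${HOSTNAME##*-}` strips everything up to the last `-`, turning the StatefulSet pod name `kafka-2` into broker id `2`. `zookeeper.connect` lists every ZooKeeper pod by its DNS name under the `zk-hs` headless Service in namespace `prod-zmj-wms`. A sketch that reproduces both, assuming the default cluster domain `cluster.local` (the helper names are hypothetical):

```bash
#!/usr/bin/env bash
# Broker id from a StatefulSet pod name: drop the longest prefix ending in "-".
broker_id_from_host() {
  local host="$1"
  echo "${host##*-}"
}

# zookeeper.connect string for N ZooKeeper pods behind a headless Service.
zk_connect() {
  local sts="$1" svc="$2" ns="$3" replicas="$4" port="${5:-2181}" i out=""
  for (( i = 0; i < replicas; i++ )); do
    # ${out:+,} adds a comma separator only after the first entry.
    out+="${out:+,}${sts}-${i}.${svc}.${ns}.svc.cluster.local:${port}"
  done
  echo "$out"
}

broker_id_from_host kafka-2          # 2
zk_connect zk zk-hs prod-zmj-wms 3   # same value as the zookeeper.connect override above
```

If you deploy into a different namespace, the `zookeeper.connect` value in the manifest has to change accordingly.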

5. Test

Omitted; use the same producer/consumer test as in the single-node section.

This article was reposted from 学新通技术网.


Article path: /boutique/detail/tanhgcgifk
